Grounding LLMs: The Knowledge Graph foundation every AI project needs

“Mr. Schwartz, I’ve reviewed your opposition brief,” Federal Judge P. Kevin Castel began, his tone measured but pointed. “You cite six cases in support of your client’s position. I’d like to discuss Varghese v. China Southern Airlines.”

Steven Schwartz, a lawyer with decades of experience, straightened in his chair. “Yes, Your Honour. That’s an Eleventh Circuit decision from 2019 that directly supports…”

“I’m having difficulty locating it,” the judge interrupted. “The citation you provided — 925 F.3d 1339 — doesn’t appear in any database my clerks have checked. Can you provide the court with a copy of the full opinion?”

Schwartz felt the first flutter of concern. “Of course, Your Honour. I’ll submit it promptly.”

Back at his office, Schwartz returned to his source. He typed into ChatGPT: “Is Varghese v. China Southern Airlines, 925 F.3d 1339 (11th Cir. 2019) a real case?” The response came back confidently: “Yes, Varghese v. China Southern Airlines, 925 F.3d 1339 is a real case. It can be found on reputable legal databases such as LexisNexis and Westlaw.”

Reassured, Schwartz asked ChatGPT for more details about the case. The AI obligingly generated what appeared to be excerpts from the opinion, complete with convincing legal reasoning and properly formatted quotes.

He submitted these to the court.

Three weeks later

Judge Castel’s order was scathing: “The Court is presented with an unprecedented circumstance. Six of the submitted cases appear to be bogus judicial decisions with bogus quotes and bogus internal citations” [Legal Dive].

All six cases were completely fabricated. They had never been decided by any court. They didn’t exist.

In his subsequent affidavit, Schwartz admitted that he had “never previously used ChatGPT for conducting legal research and therefore was unaware of the possibility that its contents could be false” [Legal Dive]. He told the court he thought ChatGPT was “like, a super search engine” [Fortune]: an understandable but catastrophically wrong assumption, and one still shared by many professionals deploying LLMs across industries today.

What went wrong?

The Schwartz case reveals a fundamental misunderstanding about what LLMs can and cannot do. There’s a world of difference between asking ChatGPT “What is the Taj Mahal?” and asking it “What legal precedents support my client’s position in an aviation injury case?”

The first query requires general knowledge — information widely available and relatively stable. The second requires access to a specific, authoritative, evolving corpus of legal decisions built up over centuries of jurisprudence, where precision matters and every citation must be verifiable.

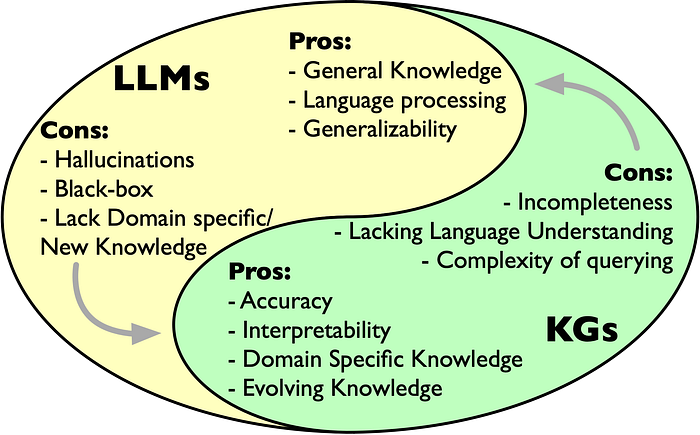

We know LLMs hallucinate. This isn’t news, and substantial effort has gone into mitigating the problem. Techniques like reinforcement learning from human feedback (RLHF), improved training data curation, and confidence scoring have all helped. But context matters enormously. An LLM might perform admirably when asked about general topics, yet fail catastrophically when tasked with domain-specific queries requiring authoritative sources.

Retrieval-augmented generation (RAG) approaches — where you take documents, split them into chunks, and retrieve relevant passages on demand — can partially address this. RAG works reasonably well when you have textual content and need specific answers grounded in that content. But when your knowledge base is the result of years of accumulated practice — legal precedents, medical protocols, financial regulations, engineering standards — simple chunk-based retrieval cannot provide the precision and contextual understanding required. You need to know not just what a case says, but how it relates to other cases, when it applies, what jurisdiction it covers, and whether subsequent decisions have modified its standing.
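To make that limitation concrete, here is a minimal sketch of chunk-based retrieval in Python. It is an illustration under stated assumptions, not a production RAG pipeline: a toy keyword-overlap scorer stands in for the embedding similarity a real system would use, and the case names and text are hypothetical. The retriever finds a relevant-looking passage, but nothing in that passage records that the case was later overruled, because that fact lives in a different document.

```python
import re


def chunk(text: str, size: int = 30) -> list[str]:
    """Split text into chunks of at most `size` words (no overlap, for simplicity)."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def tokens(s: str) -> set[str]:
    """Lower-cased set of alphanumeric words, punctuation stripped."""
    return set(re.findall(r"[a-z0-9]+", s.lower()))


def retrieve(query: str, chunks: list[str], k: int = 1) -> list[str]:
    """Rank chunks by word overlap with the query (a stand-in for vector similarity)."""
    q = tokens(query)
    return sorted(chunks, key=lambda c: len(q & tokens(c)), reverse=True)[:k]


# Hypothetical corpus: two separate documents about fictional cases.
doc_a = ("In Smith v. Acme Air the court held that the limitation period for "
         "aviation injury claims is tolled during bankruptcy proceedings.")
doc_b = ("Years later, Doe v. Acme Air overruled Smith v. Acme Air on the "
         "tolling question.")

chunks = chunk(doc_a) + chunk(doc_b)
top = retrieve("limitation period aviation injury tolled bankruptcy", chunks)
print(top[0])  # the Smith passage, with no hint that it was overruled
```

The retrieved chunk reads as authoritative, yet the single most important fact about it sits in another chunk, connected only implicitly. An explicit "overruled by" relationship is exactly the kind of structure chunking throws away.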

Yet hallucinations and retrieval limitations are only one dimension of the problem. The architectural challenges of LLMs run deeper:

- Their knowledge is opaque: Information is stored as billions of parameters that cannot be inspected or explained. You cannot audit what the model “knows” or verify its sources.

- They are hard to update: Incorporating new information (a fresh legal precedent, an updated regulation, or a revised medical guideline) requires expensive retraining or complex fine-tuning.

- They lack domain grounding: General-purpose LLMs miss the expert knowledge, business rules, and regulatory requirements that determine whether outputs are actually useful in professional contexts.

- They provide no audit trail: It’s impossible to trace how they reached conclusions, making them unsuitable for contexts requiring accountability.

These aren’t minor technical issues. They’re architectural problems that determine whether AI projects succeed or fail. Gartner predicts that more than 40% of agentic AI projects will be cancelled by the end of 2027, citing escalating costs, unclear business value, and inadequate risk controls. Behind those symptoms sits a common pattern: organisations are deploying powerful LLM technology without the knowledge infrastructure needed to make it trustworthy.

The Schwartz case makes this crystal clear: LLMs alone cannot function as reliable question-answering tools for critical applications unless they have proper access to real, consistent, verifiable data. And there’s no shortcut. Simply throwing more documents at an LLM through RAG, or hoping better prompting will compensate, misses the fundamental issue.

Knowledge must be organised so that it is manageable, up to date, properly maintained and, crucially, structured to support the types of reasoning your application requires. The real question isn’t whether LLMs are powerful enough. It’s what structure knowledge should have, and what processes we need to build, maintain, and access it properly.

This is where knowledge graphs enter the picture.

What are Knowledge Graphs?

A knowledge graph is more than a database. As we define in Knowledge Graphs and LLMs in Action:

A knowledge graph is an ever-evolving graph data structure composed of a set of typed entities, their attributes, and meaningful named relationships. Built for a specific domain, it integrates both structured and unstructured data to craft knowledge for humans and machines.
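As a rough illustration of what “typed entities, attributes, and named relationships” can look like in code, here is a minimal in-memory sketch in Python. It is not the book’s implementation and not any graph database’s API; the class names, the schema format, and the relationship names (`DECIDED_BY`, `CITES`) are hypothetical. The schema is what gives the graph its semantics: a relationship is accepted only if it connects the entity types it was declared for.

```python
from dataclasses import dataclass, field


@dataclass
class Entity:
    id: str
    type: str                                                # entity type, e.g. "Case" or "Court"
    attrs: dict[str, object] = field(default_factory=dict)   # free-form attributes


@dataclass
class KnowledgeGraph:
    # Allowed relationship types: name -> (subject entity type, object entity type)
    schema: dict[str, tuple[str, str]]
    entities: dict[str, Entity] = field(default_factory=dict)
    edges: set[tuple[str, str, str]] = field(default_factory=set)

    def add_entity(self, entity: Entity) -> None:
        self.entities[entity.id] = entity

    def relate(self, subj: str, rel: str, obj: str) -> None:
        """Add a named relationship, rejecting any the schema does not allow."""
        if rel not in self.schema:
            raise ValueError(f"unknown relationship type: {rel}")
        want_subj, want_obj = self.schema[rel]
        got_subj, got_obj = self.entities[subj].type, self.entities[obj].type
        if (got_subj, got_obj) != (want_subj, want_obj):
            raise TypeError(f"{rel} expects {want_subj}->{want_obj}, got {got_subj}->{got_obj}")
        self.edges.add((subj, rel, obj))

    def targets(self, subj: str, rel: str) -> list[str]:
        """Objects reachable from `subj` via relationship `rel`, sorted for stable output."""
        return sorted(o for s, r, o in self.edges if s == subj and r == rel)


# Hypothetical, fictional data for illustration only.
kg = KnowledgeGraph(schema={"DECIDED_BY": ("Case", "Court"), "CITES": ("Case", "Case")})
kg.add_entity(Entity("case:1", "Case", {"name": "Smith v. Acme Air", "year": 2015}))
kg.add_entity(Entity("case:2", "Case", {"name": "Doe v. Acme Air", "year": 2021}))
kg.add_entity(Entity("court:1", "Court", {"name": "Court of Appeals"}))
kg.relate("case:1", "DECIDED_BY", "court:1")
kg.relate("case:2", "CITES", "case:1")
print(kg.targets("case:2", "CITES"))  # ['case:1']
```

Calling `kg.relate("court:1", "CITES", "case:1")` raises a `TypeError`, because under this schema a court cannot cite a case. A real KG platform would express such constraints in its own schema or ontology language; the point here is only that relationships carry explicit, checkable meaning.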

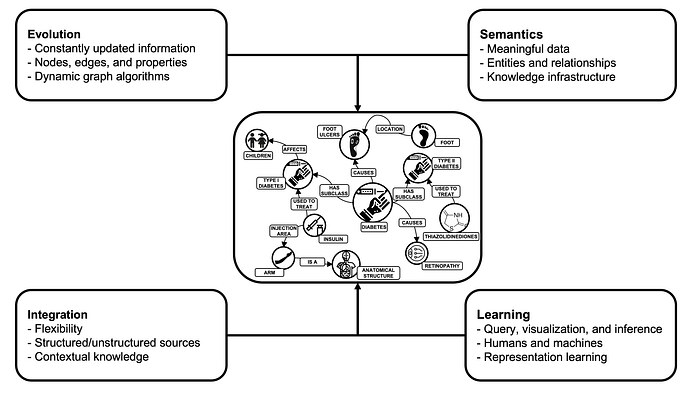

KGs therefore rest on four foundational pillars:

- Evolution: Constantly updated information that seamlessly incorporates new data without structural overhauls

- Semantics: Meaningful data representation through typed entities and explicit relationships that capture domain knowledge

- Integration: Flexibility to harmonise structured and unstructured sources from multiple origins

- Learning: Support for querying, visualisation, and inference by both humans and machines

Crucially, KG knowledge is auditable and explainable — users can trace exactly where information came from and verify it against authoritative sources.
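One way to see why auditability follows from structure: if every fact is stored together with its source, any answer assembled from those facts can cite them. The Python sketch below illustrates that idea under simple assumptions; the `ProvenanceStore` class, the fictional case names, and the source identifiers are all hypothetical. Real systems might record provenance as relationship properties or named graphs instead of a field next to each triple.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fact:
    subject: str
    predicate: str
    obj: str
    source: str  # where the fact came from (hypothetical identifier)


class ProvenanceStore:
    """Toy triple store in which every fact must carry its source."""

    def __init__(self) -> None:
        self._facts: list[Fact] = []

    def assert_fact(self, subject: str, predicate: str, obj: str, source: str) -> None:
        if not source:
            raise ValueError("refusing to store a fact without provenance")
        self._facts.append(Fact(subject, predicate, obj, source))

    def explain(self, subject: str, predicate: str) -> list[Fact]:
        """Return every stored fact (with its source) matching subject and predicate."""
        return [f for f in self._facts if f.subject == subject and f.predicate == predicate]


store = ProvenanceStore()
store.assert_fact("Smith v. Acme Air", "decided_in", "2015", source="court-records/2015/0042")
store.assert_fact("Smith v. Acme Air", "overruled_by", "Doe v. Acme Air",
                  source="court-records/2021/0107")

for fact in store.explain("Smith v. Acme Air", "overruled_by"):
    print(f"{fact.subject} {fact.predicate} {fact.obj}  [source: {fact.source}]")
```

Refusing facts without a source is a deliberate design choice in this sketch: it makes “where did this come from?” answerable for everything the store holds.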

Intelligent Advisor Systems vs Autonomous Systems

Before exploring how to combine these technologies, we need to understand a critical distinction in how intelligent systems should be deployed.

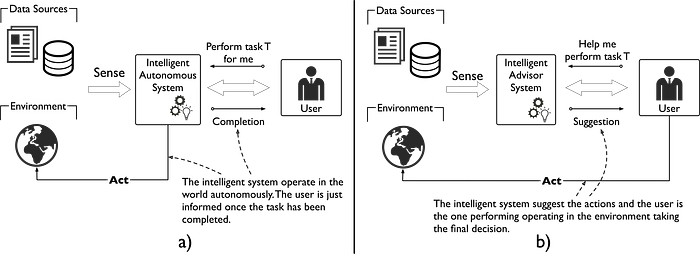

Not all intelligent systems are created equal. Intelligent autonomous systems act independently, making decisions and executing actions on behalf of users with minimal human input. Think of self-driving vehicles, which must operate in real time without human intervention.

Intelligent advisor systems (IASs), by contrast, are designed to support rather than replace human judgement. As we define in Knowledge Graphs and LLMs in Action:

An intelligent advisor system’s role is to provide information and recommendations. Key features include decision support, context awareness, and user interaction. These systems are designed for easy interaction, allowing users to explore options, ask questions, and receive detailed explanations to aid their decision-making.

For critical applications such as legal research, medical diagnosis, financial analysis, and compliance monitoring, advisor systems that augment rather than replace human expertise are not just preferable; they’re essential. The architecture must support the human gatekeeper’s responsibility, not bypass it.

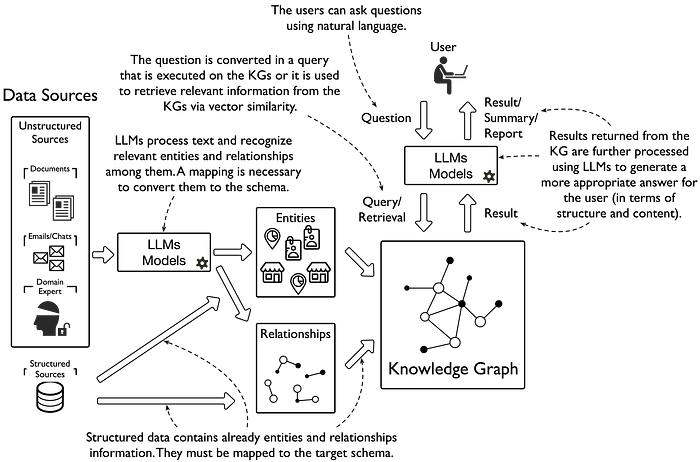

The hybrid approach: LLMs + KGs

When we combine KGs and LLMs, we create systems where the whole exceeds the sum of its parts:

KGs provide the foundation:

- Structured, verified knowledge that acts as factual grounding

- Explicit representation of domain rules and constraints

- Audit trails showing how conclusions were reached

- Dynamic updating without model retraining

LLMs provide the interface:

- Natural language query processing

- Automated entity extraction from unstructured data to build KGs

- Translation of complex graph queries into accessible language

- Summarisation of results into human-readable reports

Consider how this could have prevented Schwartz’s disaster. A hybrid system would:

- Use the LLM to process natural language queries

- Query the KG for verified information with real citations and provenance

- Present results with context: “Found 12 verified cases with citations from authoritative databases”

- Provide verification links to actual sources

- Flag uncertainty: “No cases found matching this exact pattern. Consider these alternatives.”

Most critically: when asked “Is this case real?”, the system would respond: “This case citation cannot be verified in authoritative databases. Status: UNVERIFIED.”
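The verification behaviour described above can be sketched in a few lines of Python. This is a minimal illustration under loud assumptions: `extract_citations` is a hypothetical stand-in for the LLM entity-extraction step (a regular expression here, so the example runs offline), `VERIFIED_CASES` is a hypothetical dictionary standing in for a KG populated from authoritative sources, and the “Ex.” reporter and the case it lists are invented placeholders. The key design choice is that a failed lookup yields UNVERIFIED rather than a generated answer.

```python
import re
from dataclasses import dataclass

# Hypothetical stand-in for a KG built from authoritative sources, keyed by citation.
# "Ex." is an invented placeholder reporter and the case is fictional.
VERIFIED_CASES = {
    "101 Ex. 202": {"name": "Smith v. Acme Air", "court": "Court of Appeals",
                    "year": 2015, "source": "court-records/2015/0042"},
}

# Matches Federal Reporter citations (F., F.2d, F.3d, F.4th) and the placeholder "Ex." reporter.
CITATION = re.compile(r"\b\d+\s+(?:F\.(?:2d|3d|4th)?|Ex\.)\s+\d+\b")


@dataclass
class Verification:
    citation: str
    status: str  # "VERIFIED" or "UNVERIFIED"
    detail: str


def extract_citations(text: str) -> list[str]:
    """Hypothetical stand-in for the LLM extraction step: pull citations from free text."""
    return CITATION.findall(text)


def verify(citation: str) -> Verification:
    """Look the citation up in the graph; report UNVERIFIED instead of guessing."""
    record = VERIFIED_CASES.get(citation)
    if record is None:
        return Verification(citation, "UNVERIFIED",
                            "This case citation cannot be verified in authoritative databases.")
    return Verification(citation, "VERIFIED",
                        f"{record['name']} ({record['court']}, {record['year']}); "
                        f"source: {record['source']}")


def answer(question: str) -> list[Verification]:
    return [verify(c) for c in extract_citations(question)]


question = ("Is Varghese v. China Southern Airlines, 925 F.3d 1339 (11th Cir. 2019) "
            "a real case? And what about 101 Ex. 202?")
for v in answer(question):
    print(v.status, "|", v.citation, "|", v.detail)
```

In a real system the LLM would handle the language on both ends (understanding the question, phrasing the reply), while the yes/no question of whether a citation exists is answered only by the graph lookup.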

The comprehensive value proposition

Hybrid systems target the core challenges that cause AI projects to fail:

- Hallucinations are mitigated by grounding LLM responses in verifiable, curated facts from the KG.

- Knowledge stays current through dynamic KG updates. The LLM accesses up-to-date information through the evolving KG without requiring retraining.

- Explainability is built in: each answer can be traced along explicit paths in the graph back to its sources.

- Domain-specific accuracy improves because KGs encode expert knowledge, regulations, and relationships that general-purpose LLMs lack.
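The “knowledge stays current” point is easy to see in miniature. In the hypothetical Python sketch below, the graph class, the relationship names, and the rule that a case counts as “good law” until an `OVERRULED_BY` edge appears are simplifying assumptions for illustration; real citator logic is far more nuanced. What it shows is that a new decision changes every downstream answer as soon as one edge is written, with no model retraining.

```python
from collections import defaultdict


class CaseGraph:
    """Minimal adjacency-list graph of hypothetical cases and their relationships."""

    def __init__(self) -> None:
        self.out = defaultdict(set)  # (subject, relation) -> set of objects

    def add(self, subj: str, rel: str, obj: str) -> None:
        self.out[(subj, rel)].add(obj)

    def is_good_law(self, case: str) -> bool:
        """Simplified rule: a case is good law until an OVERRULED_BY edge exists."""
        return not self.out.get((case, "OVERRULED_BY"))


g = CaseGraph()
g.add("Smith v. Acme Air", "DECIDED_BY", "Court of Appeals")
print(g.is_good_law("Smith v. Acme Air"))  # True

# A new decision arrives: one edge insert, no model retraining.
g.add("Smith v. Acme Air", "OVERRULED_BY", "Doe v. Acme Air")
print(g.is_good_law("Smith v. Acme Air"))  # False
```

Because the LLM reads the graph at query time instead of memorising it, the update is visible to the very next question.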

Building AI systems worthy of trust

The judge in Schwartz’s case noted that “technological advances are commonplace, and there is nothing inherently improper about using a reliable artificial intelligence tool for assistance,” but emphasised that “existing rules impose a gatekeeping role on attorneys to ensure the accuracy of their filings” [Fortune].

This principle applies universally: every professional deploying AI has a gatekeeping responsibility. The question is whether your AI systems are architected to support that responsibility or undermine it.

The future of AI in critical applications — across every industry — depends on building intelligent advisor systems that combine the structured knowledge and explainability of KGs with the natural language understanding and pattern recognition of LLMs. This isn’t about choosing between technologies. It’s about understanding that LLMs alone lack the foundation necessary for trustworthy AI. Knowledge graphs provide that foundation.

When organisations deploy LLMs without this grounding, projects fail — not because the technology isn’t powerful, but because power without foundation is unreliable. When done properly — combining technologies that complement each other’s strengths and compensate for each other’s weaknesses — we create systems that genuinely augment human intelligence.

In Knowledge Graphs and LLMs in Action, we provide comprehensive guidance on building these hybrid systems: from modelling KG schemas and using LLMs for entity extraction, to creating conversational AI that answers domain-specific questions with both accuracy and explainability. The book walks through concrete implementations, demonstrating how to architect intelligent advisor systems that organisations can actually trust and users will actually adopt.

The architectural choice is yours: deploy LLMs on unstable ground and risk joining the failed projects, or build them on the knowledge graph foundation that makes AI trustworthy, explainable, and genuinely valuable.

Steven Schwartz learned this lesson the hard way. You don’t have to.

Knowledge Graphs and LLMs in Action by Alessandro Negro, Giuseppe Futia, Vlastimil Kus, and Fabio Montagna is available from Manning Publications.
